This month we dive into the history of Dell Computer Corporation, one of the biggest PC and server companies in the world! Michael Dell gives a first-hand perspective on Dell's biggest successes and failures over the years, as well as his intense battle with Carl Icahn over the biggest management buyout in history.

Tech Themes

Be a Tinkerer. When he was in seventh grade, Michael Dell begged his parents to buy an Apple II computer (which cost roughly $5,000 in today's dollars). As soon as the computer arrived, he took the entire thing apart to see exactly how the system worked. After diving deep into each component, Dell started attending Apple user groups, and at one of them he met a young, disheveled Steve Jobs. Dell began tutoring people on the Apple II's components and how to get the most out of it. When IBM entered the market in 1981 with the 5150 personal computer, he did the same thing: he took it apart and examined the components. He realized that almost everything inside IBM's machine came from other companies (not IBM) and that the total cost of its components was well below the IBM price tag. From this simple insight, he had a business. He started upgrading computers for local businesspeople in Austin, and Dell's machines cost less and delivered more performance. The business grew so large ($50,000 to $80,000 in revenue per month) that during his freshman year at UT Austin, Dell decided to drop out, much to his parents' dismay. On May 3rd, 1984, Dell incorporated his company and never returned to school.

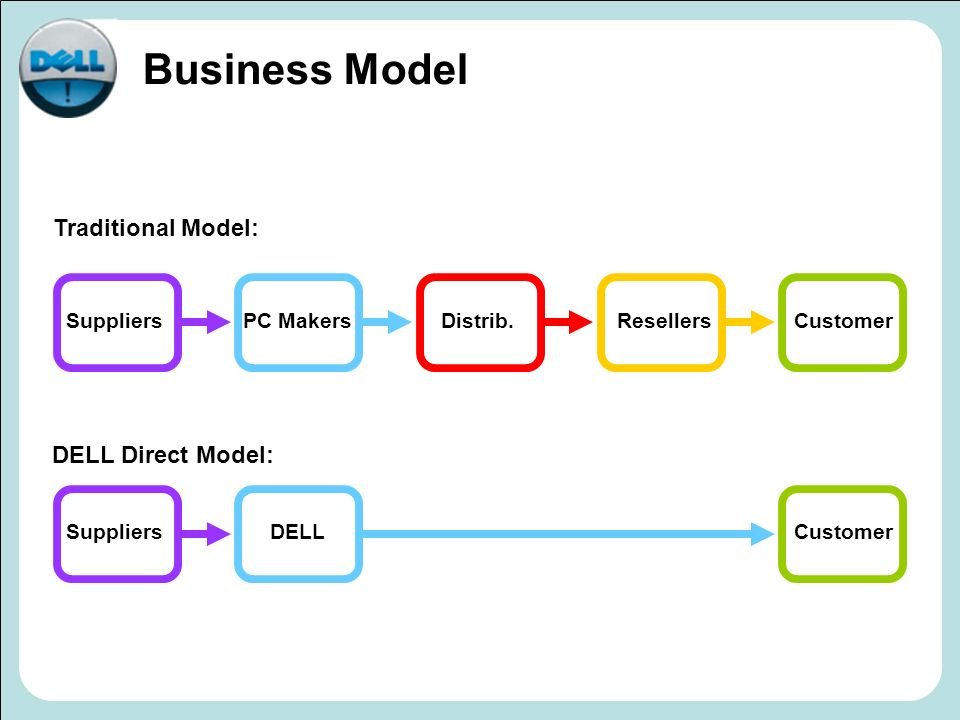

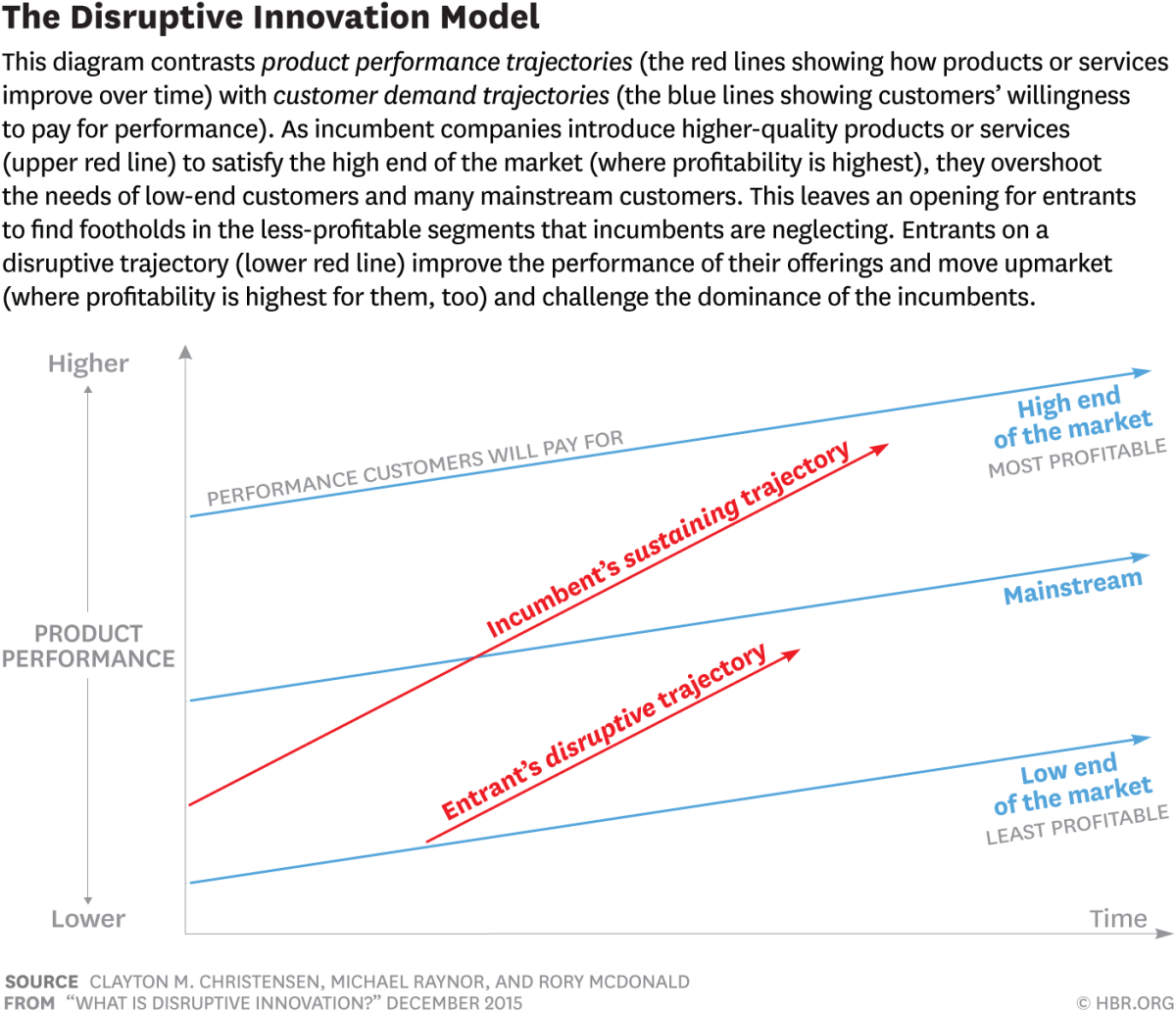

Lower Prices and Better Service - a Powerful Combination. Dell Computer Corporation was the original direct-to-consumer (DTC) business. Rather than selling through big-box retail stores, Dell took orders by mail, and when the internet took off in the mid-to-late 1990s, it started taking orders online. After realizing that the cost of components was significantly lower than the selling price, Dell flew to the Far East to meet his suppliers. He started placing big orders and negotiating better and better prices. This strategy is the classic low-end disruption pattern we learned about in Clayton Christensen's The Innovator's Dilemma: a lower-priced competitor that also offers better service and customizability starts to crush the competition. Christensen makes the important point that the internet itself was a sustaining innovation for Dell but a very disruptive one for the market as a whole: "Usually, the technology simply is an enabler of the disruptive business model. For example, is the Internet a disruptive technology? You can't say that. If you bring it to Dell, it's a sustaining technology to what Dell's business model was in 1996. It made their processes work better; it helped them meet Dell's customers' needs at lower cost. But when you bring the very same Internet to Compaq, it is very disruptive [to the company's then dealer-only sales model]. So how do we treat that? We praise [CEO Michael] Dell, and we fire Eckhard Pfeiffer [Compaq's former CEO]. In reality, those two managers are probably equally competent." If competitors lowered their prices, Dell could source cheaper components and keep cutting its own prices. Dell's strategy drove many rivals out of the personal computer market: IBM left, HP acquired Compaq in a deal that proved disastrous for HP, and many others never made it back.

Layoffs, Crises, and Opportunities. Dell IPO'd in 1988 and joined the Fortune 500 in 1991 as it hit $800m in annual sales. So you would think the company would be humming when it hit $2B in sales in 1993, right? Wrong. Everything was breaking. When a company scales that quickly, it doesn't have time to build processes and systems, and personnel issues started cropping up more frequently. As Dell recalls, the head of sales had a drinking problem, and the head of HR had a stripper girlfriend on the payroll. The company was late to market with notebooks, and it had to recall notebooks that could, in some instances, catch fire. During that time, Dell hired Bain to produce an internal report on how the company should change its processes for its new scale. Kevin Rollins of the Bain team knew the business extremely well and thought incredibly strategically. After the Bain assignment, Rollins joined the company, rising to Vice Chairman and ultimately serving as CEO from 2004 to 2007. One of his first recommendations was to end the company's experiment with selling through department stores and stay focused on DTC. Around the internet bubble, Dell faced another crisis: its stock had risen dramatically for many years, but once the bubble burst, it fell from $50 to $17 a share in a matter of months. The company missed its earnings estimates for five quarters in a row and conducted two rounds of layoffs, one of 1,700 people and another of 4,000. During this time, an internal poll showed that half of Dell's team members would leave for another company that paid them the same. Dell realized that the values statement he had written in 1988 no longer resonated and needed updating, so he refreshed it and refocused the company on its role in the global IT economy. Dell's lesson: never waste a good crisis, and always look for the opportunity for growth and improvement when things aren't going well.

Business Themes

Carl Icahn and Dell. No one in business plays the corporate nemesis quite like Carl Icahn. Icahn was born in Rockaway, NY, and earned his tuition money at Princeton playing poker against the rich kids. He is an activist investor who popularized activist investing with some big, bold battles against companies in the early 1980s. Icahn got his start in 1968 by purchasing a seat on the New York Stock Exchange, made his first major takeover attempt in 1978, and the rest is history. Icahn takes an intense stance against companies, typically around big mergers, acquisitions, or divestitures. His playbook: 1) buy up a large stake, often 5-10% of a company; 2) accuse the company, and usually its management, of incompetence or a lousy strategy; 3) argue for some action, such as the sale of a division, a change in management, or a special dividend; 4) sue the company on a variety of shareholder-protection grounds; 5) send letters to shareholders and the company detailing his findings and claims; 6) put up a new slate of board members; and 7) wait to profit, or get paid to go away (a payoff known as greenmail). Icahn used these exact tactics when he took on Michael Dell. He issued several scathing letters criticizing the company's poor performance, highlighting Michael Dell's obvious conflicts of interest as both CEO and buyer, and demanding that the special committee evaluate the deal fairly. Icahn usually makes money when he gets involved; he is essentially a gnat that doesn't go away until he profits one way or another. Even after losing the fight, Icahn still made a profit in the tens of millions, and his fight with Dell was far from over.

Take Privates and Transformation. Michael Dell had thought a couple of times about taking the company private when he was approached by Egon Durban of Silver Lake Partners, a large tech private equity firm. Dell and Durban went on a walk in Hawaii and worked out what a transaction might look like. The problem for Dell at the time was that the PC market was under siege. People thought tablets were the future, and the PC market's declining volumes seemed to confirm it. Dell had spent $14B on an acquisition spree, buying a string of enterprise software companies, including Quest Software, SonicWall, Boomi, SecureWorks, and more, as it redirected its strategy. But these companies had yet to kick into gear, and most of Dell's business was still PCs and servers. The stock price had fallen about 45% since Michael Dell rejoined as CEO in 2007. Now seemed like a great time to go private: the company needed to transform, and fast. Enacting a transformation in the public markets is tough because Wall Street focuses on quarter-to-quarter metrics over long-term vision. Dell began seriously exploring the idea in June 2012, in conversations with Southeastern Asset Management, then the company's largest outside shareholder. After letting the idea percolate, Dell held discussions with Silver Lake and KKR. Silver Lake and Dell submitted successive bids of $12.70, $12.90, $13.25, $13.60, and finally $13.65 per share. On February 4th, 2013, the special committee accepted the offer. On March 5th, Carl Icahn entered the fray, saying he owned about $1B of shares. Icahn submitted a rough proposal under which the company would pay a one-time special dividend, Icahn would acquire a substantial part of the stock, and Dell would remain public under different leadership. On July 18th, the special committee delayed the vote on the acquisition because it became clear that Silver Lake and Dell couldn't win enough of the "majority of the minority" votes needed to close the deal.
A few weeks later, Silver Lake and Dell raised their bid to $13.75 (the price the committee had originally asked for), and the committee agreed to change the voting standard so that unvoted shares would no longer count against the deal, clearing the way for Silver Lake and Dell to win. After various lawsuits, Icahn gave up in September 2013, when it became clear he had no way to win shareholders over to his side. It was an absolute whirlwind of a deal process, and Dell escaped with his company.

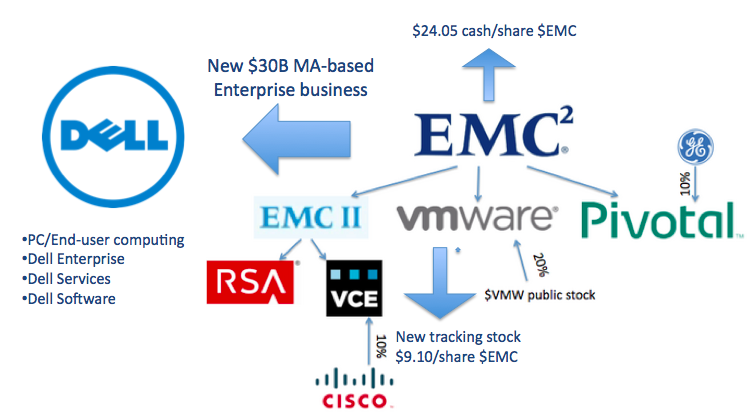

Big Deals. After Dell went private, Michael Dell and Egon Durban started scouring the world for enticing tech acquisitions. They closed a small $1.4B storage acquisition, which reaffirmed Michael Dell's interest in the storage market. After that deal, Dell revisited something that had almost happened in 2008-09: a merger with EMC. EMC was the premier enterprise storage company, with a dominant market share. On top of that, EMC owned a majority stake in VMware, a software company that had successfully virtualized the x86 architecture so that a single server could run multiple operating systems simultaneously. Throughout 2008 and 2009, Dell and EMC had seriously considered a merger, to the point that their boards held joint discussions about integration plans and deal price. The boards scrapped the deal during the financial crisis, and in the ensuing years, EMC grew and grew. By 2014 it was a $59B public company and the largest company in Massachusetts. In mid-2014, Dell started to consider the idea again, pondering the strategic and competitive implications of the deal everywhere he went. Little did he know that he was already late to the party: it later came out that both HP and Cisco had looked at acquiring EMC in 2013. HP got down to the wire, with the deal championed by Meg Whitman as a way to move past the Autonomy debacle and boardroom infighting. HP had a handshake agreement to merge with EMC in a 1:1 deal, but at the last minute, HP re-traded and demanded a more advantageous split (i.e., HP would own 55% of the combined company), and EMC said no. When EMC then turned to Dell, Whitman slammed the deal. Dell was the only remaining suitor of size, but there was still the question of how it could finance the deal, especially as a private company.
Dell's ultimate package was a pretty crazy mix of consideration: Dell issued a tracking stock tied to the VMware equity it was acquiring, took out some $40B in loans against that newly acquired VMware equity and the cash flow of Dell's underlying business, and Michael Dell and Silver Lake put in an additional $5B of equity capital. After Silver Lake and Dell settled the financing structure, Michael Dell faced a grueling interrogation from the EMC board as the final step toward approval. The deal was announced on October 12th, 2015, and closed in September 2016. By most measures, the deal appears to have been a success: the company has undergone a complete transformation, shedding some acquired assets, spinning off VMware, and going public again by acquiring its own tracking stock. Michael Dell took some huge risks, taking his company private and completing the biggest tech merger in history. It seems to have paid off handsomely.