We dive into an investing book that covers the capital cycle. In summary, the best time to invest in a sector is actually when capital is leaving or has left.

Tech Themes

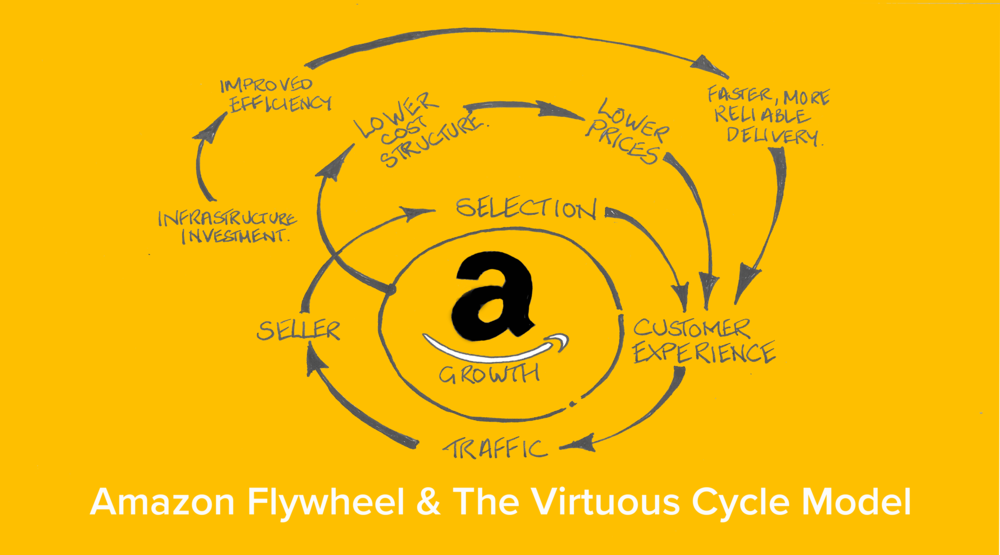

Amazon. Marathon understands that the world moves in cycles. During the internet bubble of the late 1990s, the firm refused to invest in most speculative internet companies: “At the time, we were unable to justify the valuations of any of these companies, nor identify any which [we] could safely say would still be going strong in years to come.” In August 2007, however, several years after the bubble burst, Marathon took another look at Amazon. The stock had rebounded well from its 2001 lows and was back roughly where it had been in May 1999. Sales had grown 10x since 1999, and while Marathon recognized that the bubble had tarnished Amazon's reputation, it was actually a very good business with a negative working capital cycle. On top of this, the stock had lagged over the preceding few years because Amazon was investing in two new long-term growth levers, Amazon Web Services and Fulfillment by Amazon. Marathon almost certainly underestimated the potential of these businesses, but in hindsight those margin-lowering investments look exceptional, even genius.
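To make “negative working capital cycle” concrete: the usual yardstick is the cash conversion cycle, which is days of inventory plus days of receivables minus days of payables. When it is negative, customers pay before suppliers do, so growth is partly financed by suppliers instead of by new capital. The sketch below is a minimal illustration using made-up round numbers for a hypothetical retailer; the `WorkingCapital` class and all of its figures are assumptions, not Amazon's reported financials.

```python
from dataclasses import dataclass


@dataclass
class WorkingCapital:
    """Annual figures for a HYPOTHETICAL retailer, in $ millions (not Amazon's real numbers)."""
    revenue: float
    cost_of_goods_sold: float
    inventory: float
    receivables: float
    payables: float

    def days_inventory(self) -> float:
        # Days inventory outstanding: how long goods sit before they are sold
        return self.inventory / self.cost_of_goods_sold * 365

    def days_receivables(self) -> float:
        # Days sales outstanding: how long customers take to pay
        return self.receivables / self.revenue * 365

    def days_payables(self) -> float:
        # Days payables outstanding: how long the company takes to pay suppliers
        return self.payables / self.cost_of_goods_sold * 365

    def cash_conversion_cycle(self) -> float:
        # A negative result means customers' cash arrives before suppliers are paid
        return self.days_inventory() + self.days_receivables() - self.days_payables()


retailer = WorkingCapital(
    revenue=15_000, cost_of_goods_sold=11_500,
    inventory=1_200, receivables=700, payables=3_000,
)
print(f"cash conversion cycle: {retailer.cash_conversion_cycle():.1f} days")
```

With these assumed inputs the cycle comes out at about -40 days: on average, the hypothetical retailer holds customer cash for roughly 40 days before it has to pay for the goods it sold.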

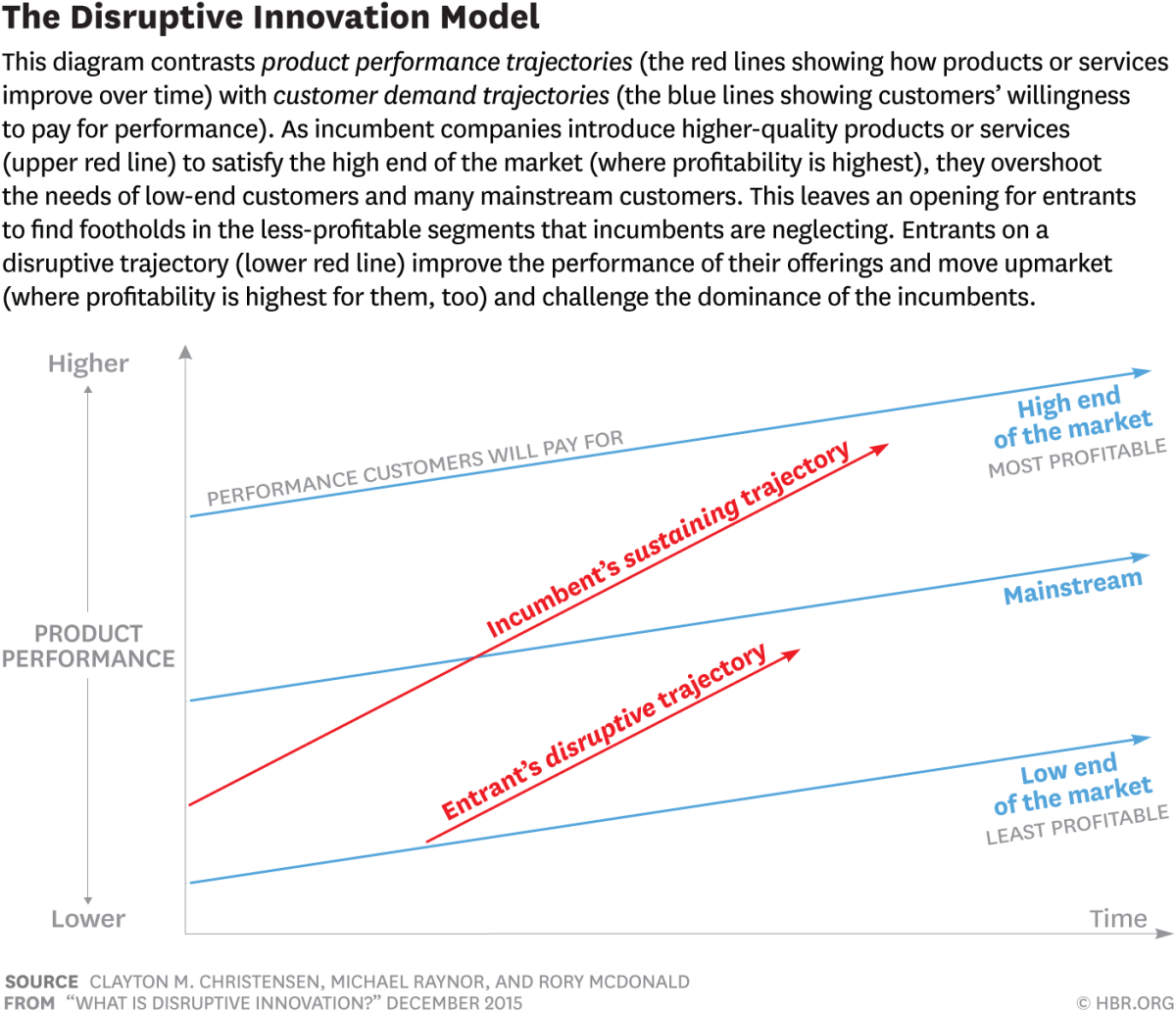

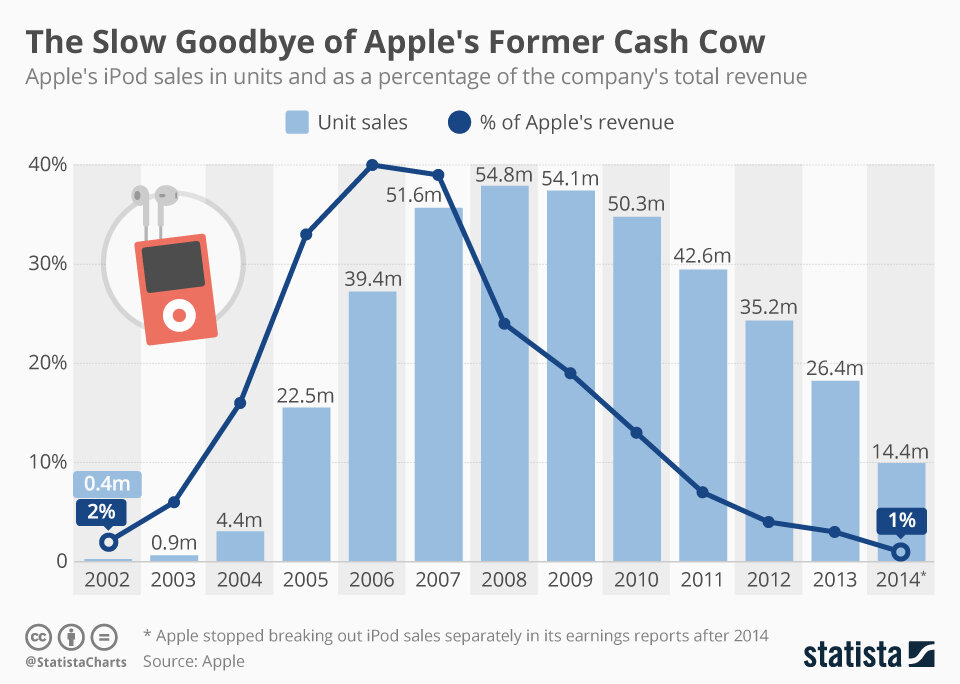

Semis. Nothing paints a clearer picture of cyclicality than semiconductors. We can debate whether AI and Nvidia have moved the industry permanently out of the cycle, but up until 2023, semiconductors were considered cyclical. As Marathon notes: “Driven by Moore’s law, the semiconductor sector has achieved sustained and dramatic performance increases over the last 30 years, greatly benefiting productivity and the overall economy. Unfortunately, investors have not done so well. Since inception in 1994, the Philadelphia Semiconductor Index has underperformed the Nasdaq by around 200 percentage points, and exhibited greater volatility…In good times, prices pick up, companies increase capacity, and new entrants appear, generally from different parts of Asia (Japan in the 1970s, Korea in 1980s, Taiwan in the mid-1990s, and China more recently). Excess capital entering at cyclical peaks has led to relatively poor aggregate industry returns.” As Fabricated Knowledge points out, the 1980s had two brutal semiconductor cycles. First, in 1981, the industry experienced severe overcapacity, leading to declining prices while inflation ravaged many businesses. Then in 1985, the US semiconductor business declined significantly. “1985 was a traumatic moment for Intel and the semiconductor industry. Intel had one of the largest layoffs in its history. National Semi had a 17% decrease in revenue but moved from an operating profit of $59 million to an operating loss of -$117 million. Even Texas Instruments had a brutal period of layoffs, as revenue shrank 14% and profits went negative”. The culprit was Japanese imports. Prices of low-end chips had fallen sharply as Japanese producers flexed their labor cost advantage. The domestic US chipmakers (National Semiconductor, Texas Instruments, Micron, and Intel) complained, leading to the 1986 US-Japan Semiconductor Agreement, which curbed below-cost Japanese pricing and set a 20% target for foreign chipmakers' share of the Japanese market.
Semiconductor manufacturing was not easy then, but it was easier than it is today, because the industry focused mainly on commoditized memory chips. 1985 is an interesting example of the capital cycle compounding when geographic expansion (new Japanese entrants) overlaps with product overcapacity (as we had in the US). When Marathon published its semiconductor thesis in February 2013, it instead favored analog chipmakers, pitching Analog Devices alongside Linear Technology. It highlighted the physical complexity of producing analog chips compared with digital ones, the complex engineering required to design them, and their low cost relative to the products they go into. “These factors - a differentiated product and company specific “sticky” intellectual capital - reduce market contestability….Pricing power is further aided by the fact that an analog semiconductor chip typically plays a very important role in a product (for example, the air-bag crash sensor) but represents a very small proportion of the cost of materials. The average selling price for Linear Technology’s products is under $2.” Analog Devices would go on to acquire Linear in 2017 for $14.8B, a nice coda to Marathon’s Analog/Linear dual pitch.

Why do we have cycles? If everyone is playing the same business game and knows that markets come and go, why do cycles happen at all? Wouldn't efficient markets keep us from getting too excited when the market is up and too sour when it is down? Wrong. Chancellor gives a number of reasons for the capital cycle: Overconfidence, Competition Neglect, Inside View, Extrapolation, Skewed Incentives, Prisoner’s Dilemma, and Limits to Arbitrage. Overconfidence is fairly straightforward: managers and investors come to believe their own judgment is infallible. When times are booming, managers want to participate in the boom, increasing investment to match “demand.” In these decisions, they often ignore what their competitors are doing and focus on themselves. Competition neglect takes hold as managers enjoy watching their stock tick up and their faces splashed across “Best CEO in America” lists. Inside View is a bit more nuanced, but Michael Mauboussin and Daniel Kahneman have written extensively on it. As Kahneman laid out in Thinking, Fast and Slow: “A remarkable aspect of your mental life is that you are rarely stumped … The normal state of your mind is that you have intuitive feelings and opinions about almost everything that comes your way. You like or dislike people long before you know much about them; you trust or distrust strangers without knowing why; you feel that an enterprise is bound to succeed without analyzing it.” When you take the inside view, you rely exclusively on your own experience rather than on how similar situations have played out. Instead, you should take the outside view and assume your problem, opportunity, or case is not unique. Extrapolation is an extremely common driver of cycles, and its effects were visible all across the investing world after the recent COVID peak. Peloton, for example, massively over-ordered inventory by extrapolating pandemic-era demand into the future.
Skewed incentives can include near-term EPS targets (which encourage buybacks and M&A), market share preservation (which encourages overinvestment), a low cost of capital (buying something with cheap debt), analyst expectations, and champion bias (you’ve decided to do something and it’s no longer attractive, but you do it anyway because you got people excited about it). The Prisoner’s Dilemma is another form of market share preservation or expansion: every firm would be better off if all of them held back on capacity, but when a competitor is acting much more aggressively, you have to decide whether it’s worth the fight, knowing that holding back may cost you share. Limits to Arbitrage is closely related to career risk: when everyone owns an overvalued market, a manager who stays out can hurt their firm through near-term underperformance, even when staying out makes investment sense. That’s why many firms keep a low tracking error against their benchmark indexes, which naturally concentrates them in the same stocks.
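The Prisoner’s Dilemma can be sketched with a two-firm payoff table. The profit numbers below are hypothetical and only their ordering matters: expanding while the rival holds back is best for the expander, mutual restraint beats mutual expansion, and holding back while the rival expands is worst. Checking each firm's best response shows why both end up expanding.

```python
from itertools import product

# HYPOTHETICAL profits (arbitrary units) for (firm A, firm B) given each firm's capacity choice
PAYOFFS = {
    ("hold", "hold"): (10, 10),      # disciplined industry: both earn good returns
    ("expand", "hold"): (12, 4),     # A grabs share from B
    ("hold", "expand"): (4, 12),     # B grabs share from A
    ("expand", "expand"): (6, 6),    # overcapacity: prices fall for everyone
}
CHOICES = ("hold", "expand")


def best_response_a(b_choice: str) -> str:
    # Firm A's profit-maximizing choice, taking firm B's choice as given
    return max(CHOICES, key=lambda a: PAYOFFS[(a, b_choice)][0])


def best_response_b(a_choice: str) -> str:
    # Firm B's profit-maximizing choice, taking firm A's choice as given
    return max(CHOICES, key=lambda b: PAYOFFS[(a_choice, b)][1])


def nash_equilibria() -> list[tuple[str, str]]:
    # A pair is stable if neither firm gains by changing its own choice alone
    return [
        (a, b) for a, b in product(CHOICES, CHOICES)
        if best_response_a(b) == a and best_response_b(a) == b
    ]


for outcome in nash_equilibria():
    print(outcome, PAYOFFS[outcome], "vs. mutual restraint", PAYOFFS[("hold", "hold")])
```

Expanding is each firm's best move whatever the rival does, so the only stable outcome is mutual expansion, which pays each firm 6 instead of the 10 they would earn by both holding back. That gap is the overcapacity the capital cycle later punishes.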

Business Themes

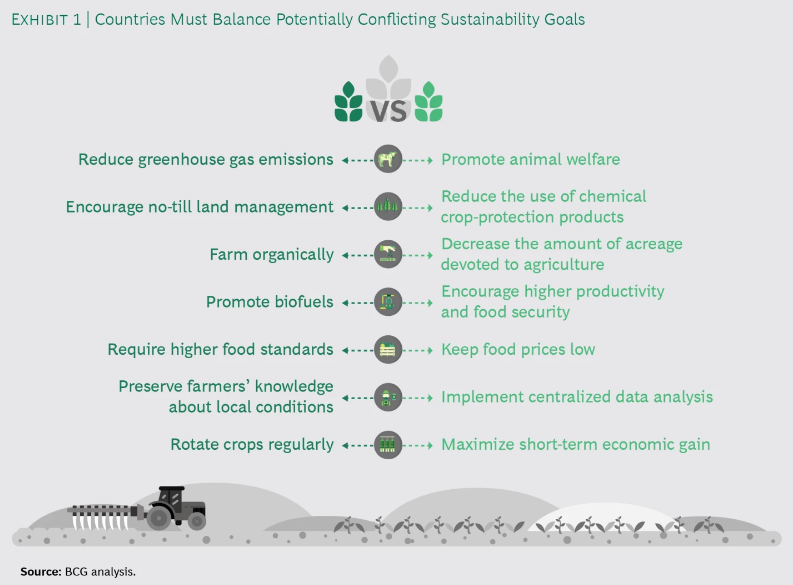

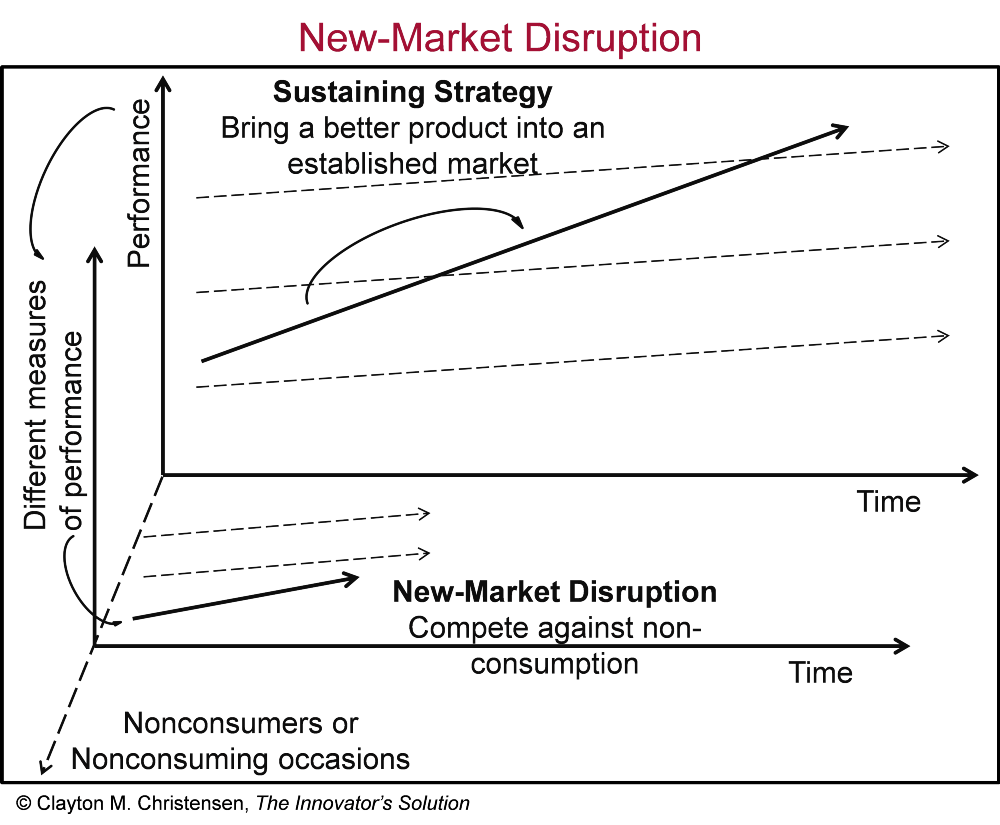

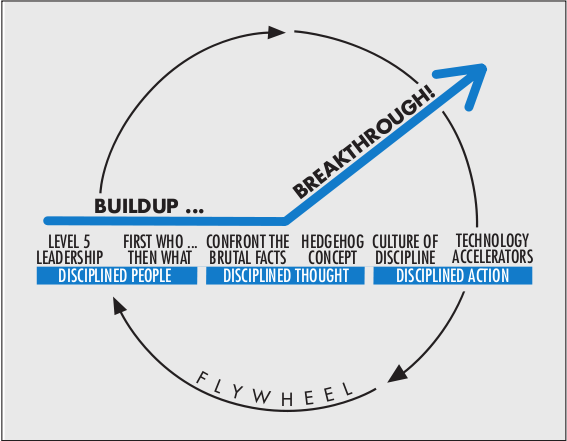

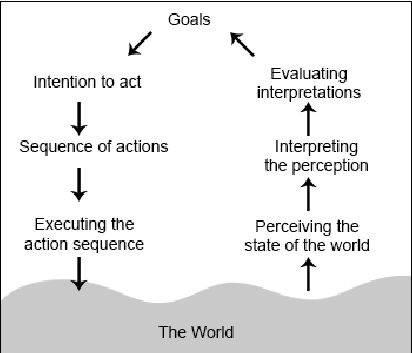

Capital Cycle. The capital cycle has four stages: 1. New entrants are attracted by the prospect of high returns: investors are optimistic. 2. Rising competition causes returns to fall below the cost of capital: the share price underperforms. 3. Business investment declines, the industry consolidates, and firms exit: investors are pessimistic. 4. An improving supply side causes returns to rise above the cost of capital: the share price outperforms. The capital cycle reveals how competitive forces and investment behavior create recurring patterns in industries over time. Picture it as a self-reinforcing loop where success breeds excess, and pain eventually leads to gain. Stage 1: The Siren Song - High returns in an industry attract capital like moths to a flame. Investors, seeing strong profits and growth, eagerly fund expansions and new entrants. Optimism reigns and valuations soar as everyone wants a piece of the apparent opportunity. Stage 2: Reality Bites - As new capacity comes online, competition intensifies. Prices fall as supply outpaces demand. Returns dip below the cost of capital, but capacity keeps coming, because many projects started in good times are hard to stop. Share prices begin to reflect the deteriorating reality. Stage 3: The Great Cleansing - Pain finally drives action. Capital expenditure is slashed. Weaker players exit or get acquired. The industry consolidates as survivors battle for market share. Investors, now scarred, want nothing to do with the sector. Capacity starts to rationalize. Stage 4: Phoenix Rising - The supply-side healing during the downturn slowly improves industry economics. With fewer competitors and more disciplined capacity, returns rise above the cost of capital. Share prices recover as improved profitability becomes evident. But this very success plants the seeds for the next cycle. What makes the pattern worth understanding is that it is perpetual: human nature and institutional incentives ensure it repeats.
The key is recognizing which stage an industry is in, and having the courage to be contrarian when others are either too optimistic or too pessimistic.
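The loop can be illustrated with a toy simulation. This is a minimal sketch under loudly hypothetical assumptions, not Marathon's model: demand is flat, return on capital is a simple linear function of utilization, managers order capacity in proportion to how far returns exceed the cost of capital, and new capacity only comes online a few periods after it is ordered. That delivery lag alone is enough to push returns above and then below the cost of capital.

```python
from collections import deque

# All parameters are HYPOTHETICAL, chosen only to make the four stages visible
DEMAND = 100.0           # flat demand, in units of capacity
COST_OF_CAPITAL = 0.08
DEPRECIATION = 0.10      # share of capacity retired each period
SENSITIVITY = 3.0        # how strongly orders respond to excess returns
LAG = 2                  # periods an order waits in the pipeline before adding capacity


def return_on_capital(capacity: float) -> float:
    # Returns rise with utilization: tight supply lets producers raise prices
    utilization = DEMAND / capacity
    return 0.10 + 0.5 * (utilization - 1.0)


def simulate(periods: int = 20, capacity: float = 80.0) -> list[float]:
    # Start short of capacity (stage 1), with replacement orders already in the pipeline
    pipeline = deque([DEPRECIATION * capacity] * LAG)
    returns = []
    for _ in range(periods):
        r = return_on_capital(capacity)
        returns.append(r)
        # Orders chase excess returns and stop entirely (never go negative) when returns are poor
        order = max(0.0, capacity * (DEPRECIATION + SENSITIVITY * (r - COST_OF_CAPITAL)))
        pipeline.append(order)
        arrived = pipeline.popleft()   # capacity ordered earlier comes online now
        capacity = capacity * (1 - DEPRECIATION) + arrived
    return returns


for t, r in enumerate(simulate()):
    side = "above" if r > COST_OF_CAPITAL else "below"
    print(f"t={t:2d} return={r:6.1%} ({side} cost of capital)")
```

With these settings, returns start far above the 8% cost of capital, drop below it once the first wave of orders lands around t=3, stay there while no new capacity is ordered and depreciation shrinks the base, and climb back above it around t=10, when ordering restarts and the loop begins again.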

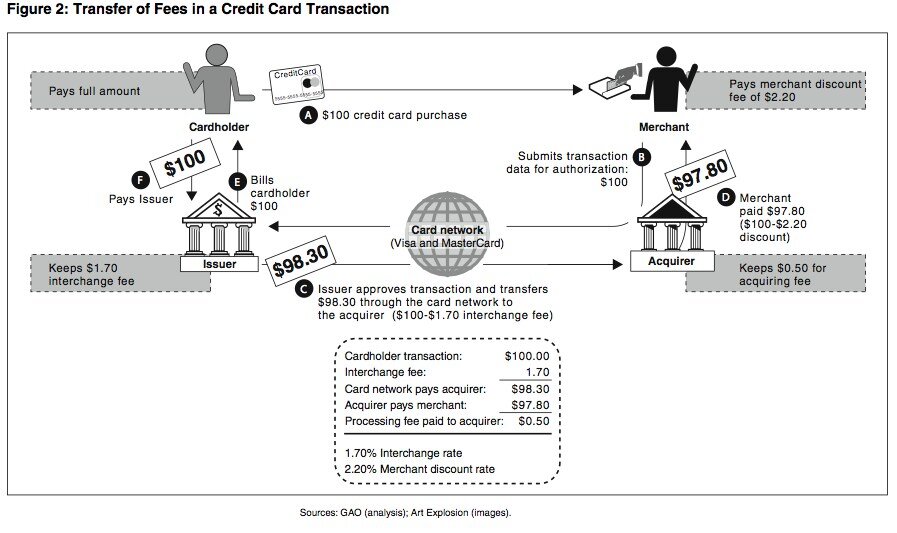

7 signs of a bubble. Nothing gets people going more than Swedish banking in the 2008-09 financial crisis. Marathon called out its Seven Deadly Sins of banking in November 2009, using Handelsbanken as a positive example and highlighting how it avoided the many pitfalls that laid waste to its peers. 1. Imprudent asset-liability mismatches on the balance sheet. If this sounds familiar, it’s because it’s the exact sin that took down Silicon Valley Bank earlier this year. As Greg Brown lays out: “Like many banks, SVB’s liabilities were largely in the form of demand deposits; as such, these liabilities tend to be short term and far less sensitive to interest rate movement. By contrast, SVB’s assets took the form of more long-term bonds, such as U.S. Treasury securities and mortgage-backed securities. These assets tend to have a much longer maturity – the majority of SVB’s assets matured in 10 years or more – and as a result their prices are much more sensitive to interest rate changes. The mismatch, then, should be obvious: SVB was taking in cash via short-term demand deposits and investing these funds in longer-term financial instruments.” 2. Supporting asset-liability mismatches by clients. Here, Chancellor calls out foreign currency lending, whereby certain European banks offered Swiss franc mortgages to Hungarians buying houses in Hungary. Not only were these banks taking on currency risk, they were exposing their customers to it, and many didn't hedge that risk appropriately. 3. Lending to “Can’t Pay, Won’t Pay” types. The financial crisis was filled with banks lending to subprime borrowers. 4. Reaching for growth in unfamiliar areas. As Marathon calls out, “A number of European banks have lost billions investing in US subprime CDOs, having foolishly relied on “experts” who told them these were riskless AAA rated credits.” 5. Engaging in off-balance sheet lending.
Many European banks maintained “Structured Investment Vehicles” (SIVs), off-balance sheet funds holding CDOs and MBSs. At one point, it got so bad that Citigroup tried the friendship approach: “The news comes as a group of banks in the U.S. led by Citigroup Inc. are working to set up a $100 billion fund aimed at preventing SIVs from dumping assets in a fire sale that could trigger a wider fallout.” These SIVs held substantial risk but were largely invisible to many investors. 6. Getting sucked into virtuous/vicious cycle dynamics. As European banks looked for expansion, many turned to lending in the Baltic states. As more lenders got comfortable lending there, GDP began to grow meaningfully, which attracted even more aggressive lending. More banks piled in so as not to miss out on the growth, not realizing that the growth was almost entirely debt-fueled. 7. Relying on the rearview mirror. Marathon points out how risk models tend to fail when the recent past has been benign. “In its 2007 annual report, Merrill Lynch reported a total risk exposure - based on ‘a 95 percent confidence interval and a one day holding period’ - of $157m. A year later, the Thundering Herd stumbled into a $30B loss!”
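Sins 1 and 7 lend themselves to a little arithmetic. The sketch below prices a plain annual-pay bond to show why long-dated assets funded by demand deposits are fragile when rates rise, and computes a textbook one-day parametric value at risk. All inputs (a 1.5% coupon, a three-point rise in yields, a $10,000m book, the two volatility figures) are hypothetical round numbers, not SVB's or Merrill Lynch's actual positions, and real bank risk models are far more elaborate than a single normal distribution.

```python
from statistics import NormalDist


def bond_price(face: float, coupon_rate: float, years: int, yield_rate: float) -> float:
    """Price of an annual-pay fixed-coupon bond: present value of coupons plus principal."""
    coupon = face * coupon_rate
    pv_coupons = sum(coupon / (1 + yield_rate) ** t for t in range(1, years + 1))
    pv_face = face / (1 + yield_rate) ** years
    return pv_coupons + pv_face


def parametric_var(exposure: float, daily_vol: float, confidence: float = 0.95) -> float:
    """One-day value at risk assuming normally distributed returns (a textbook simplification)."""
    z = NormalDist().inv_cdf(confidence)   # roughly 1.645 at 95%
    return z * daily_vol * exposure


# Sin 1: HYPOTHETICAL bonds bought at par (coupon = yield = 1.5%), then yields jump to 4.5%
for years in (1, 10):
    price = bond_price(face=100, coupon_rate=0.015, years=years, yield_rate=0.045)
    print(f"{years:>2}-year bond: price falls from 100.0 to {price:.1f}")

# Sin 7: the same HYPOTHETICAL $10,000m book, volatility estimated from a calm vs. a stressed window
for label, vol in (("calm", 0.005), ("stressed", 0.03)):
    print(f"{label:>8} window: 95% one-day VaR = ${parametric_var(10_000, vol):,.0f}m")
```

Under these assumptions, the 10-year bond loses almost a quarter of its value while the 1-year bond loses only about 3%, yet depositors can still withdraw at par on demand. On the risk side, the VaR figure scales directly with whatever volatility is fed into it, so a model calibrated on a calm window reports a small number, and by construction it says nothing about how large losses can get on the worst 5% of days.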

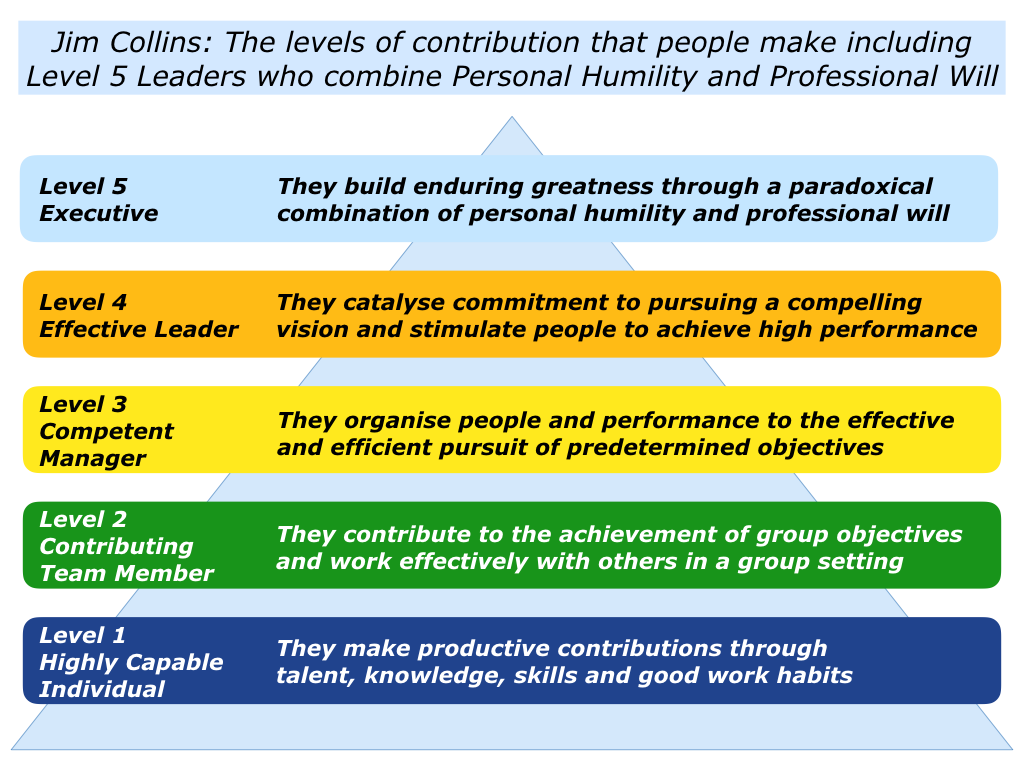

Investing Countercyclically. Björn Wahlroos exemplified exceptional capital allocation skills as CEO of Sampo, a Finnish financial services group. His most notable moves included perfectly timing the sale of Nokia shares before their collapse, transforming Sampo's property & casualty insurance business into the highly profitable "If" venture, selling the company's Finnish retail banking business to Danske Bank at peak valuations just before the 2008 financial crisis, and then using that capital to build a significant stake in Nordea at deeply discounted prices. He also showed remarkable foresight by reducing equity exposure before the 2008 crisis and deploying capital into distressed commercial credit, generating €1.5 billion in gains. Several other CEOs have demonstrated similar capital allocation prowess. Henry Singleton at Teledyne was legendary for his counter-cyclical approach to capital allocation. He issued shares when valuations were high in the 1960s to fund acquisitions, then spent the 1970s and early 1980s buying back over 90% of Teledyne's shares at much lower prices, generating exceptional returns for shareholders. As we saw in Cable Cowboy, John Malone at TCI and later Liberty Media was masterful at using financial engineering and tax-efficient structures to build value. He pioneered the use of spin-offs, tracking stocks, and complex deal structures to maximize shareholder returns while minimizing tax impacts. Tom Murphy at Capital Cities demonstrated exceptional discipline in acquiring media assets only when prices were attractive. His most famous move was purchasing ABC in 1985, then selling the combined company to Disney a decade later for a massive profit.
Warren Buffett at Berkshire Hathaway has shown remarkable skill in capital allocation across multiple decades, particularly in knowing when to hold cash and when to deploy it aggressively during times of market stress, such as the 2008 financial crisis, when he made highly profitable investments in companies like Goldman Sachs, and again in 2011, when he invested in Bank of America. Jamie Dimon at JPMorgan Chase has also proven to be an astute capital allocator, particularly during crises. He guided JPMorgan through the 2008 financial crisis while acquiring Bear Stearns and Washington Mutual at fire-sale prices, significantly strengthening the bank's competitive position. D. Scott Patterson has shown excellent capital allocation skills at FirstService. He began leading FirstService following the spin-off of Colliers in 2015, and has compounded EBITDA at a high-teens annual rate through strategic property management acquisitions alongside larger platform deals such as First OnSite and, more recently, Roofing Corp of America. Another great capital allocator is Brad Jacobs. He has had a storied career building rollups like United Waste Systems (acquired by USA Waste Services for $2.5B), United Rentals (now a $56B public company), and XPO Logistics, which he separated into three public companies (XPO, GXO, RXO), and now QXO, his latest endeavor in the building products space. These leaders share common traits with Wahlroos: patience during bull markets, aggression during downturns, and the discipline to ignore market sentiment in favor of fundamental value. They demonstrate that superior capital allocation, while rare, can create enormous shareholder value over time.
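Singleton's approach (issue stock when it is dear, retire it when it is cheap) is easy to check with arithmetic. The sketch below uses a hypothetical company and round numbers, simplifies stock-funded acquisitions to a sale of stock for cash, and assumes the underlying business value stays constant, so any gain per share comes purely from trading with other shareholders at mispriced levels. It illustrates the mechanism only; it does not use Teledyne's actual figures.

```python
from dataclasses import dataclass


@dataclass
class Company:
    intrinsic_value: float   # HYPOTHETICAL value of the business plus cash, in $ millions
    shares: float            # shares outstanding, in millions

    @property
    def value_per_share(self) -> float:
        return self.intrinsic_value / self.shares

    def issue(self, new_shares: float, price: float) -> None:
        # Selling stock above value per share transfers value from new buyers to existing holders
        self.intrinsic_value += new_shares * price
        self.shares += new_shares

    def buy_back(self, shares_retired: float, price: float) -> None:
        # Retiring stock below value per share transfers value from sellers to remaining holders
        self.intrinsic_value -= shares_retired * price
        self.shares -= shares_retired


company = Company(intrinsic_value=1_000, shares=100)   # starts at 10.0 per share
company.issue(new_shares=20, price=30)                 # boom: market pays 30 for stock worth 10
print(f"after issuance: {company.value_per_share:.1f}")
company.buy_back(shares_retired=60, price=5)           # bust: stock trades at 5, far below value
print(f"after buyback:  {company.value_per_share:.1f}")
```

In this example, value per share rises from 10.0 to about 13.3 after the issuance and to about 21.7 after the buyback, with no change in the operating business. Run in reverse (buying back stock when it is expensive), the same arithmetic destroys value for the remaining holders.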

Dig Deeper