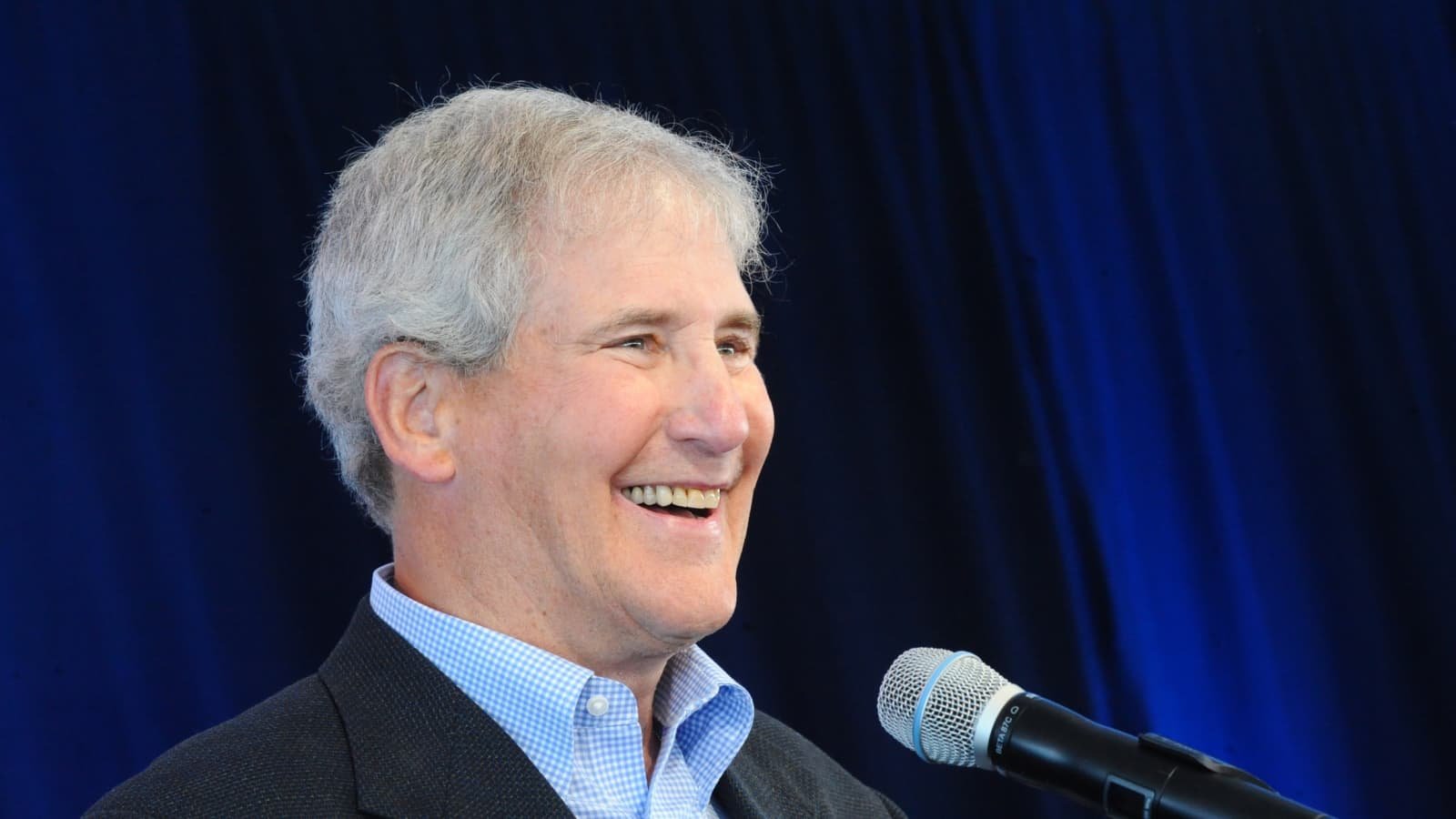

This month we read a book about the famous CEO and executive coach Bill Campbell. Bill had an unusual background for a Silicon Valley legend: he was a losing college football coach at Columbia. Despite a late start to his technology career, Bill’s timeless leadership principles and focus on people are helpful for any leader at a company of any size.

Tech Themes

Product First. After a short time at Kodak, Bill realized how critical it was to support product and engineering. As a former football coach, he was not intimately familiar with the intricacies of photographic film. Still, Campbell understood that the engineers ultimately determined the company's fate. A few months into the job, Bill did something that no one else in marketing had thought to do: he went into the engineering lab and started talking to the engineers. He told them that Fuji was hot on Kodak's heels and that the company should try to make a new type of film that might relieve some of the competitive pressure. The engineers were excited to hear feedback on their products and learn more about other aspects of the business. After a few months of gestation, the engineering team produced a new type of film: "This was not how things worked at Kodak. Marketing guys didn't go talk to engineers, especially the engineers in the research lab. But Bill didn't know that, or if he did, he didn't particularly care. So he went over to the building that housed the labs, introduced himself around, and challenged them to come up with something better than Fuji's latest. That challenge helped start the ball rolling on the film that eventually launched as Kodacolor 200, a major product for Kodak and a film that was empirically better than Fuji's. Score one for the marketing guy and his team!" Campbell understood that product was the heart of any technology company, and he sought to empower product leaders whenever he had a chance.

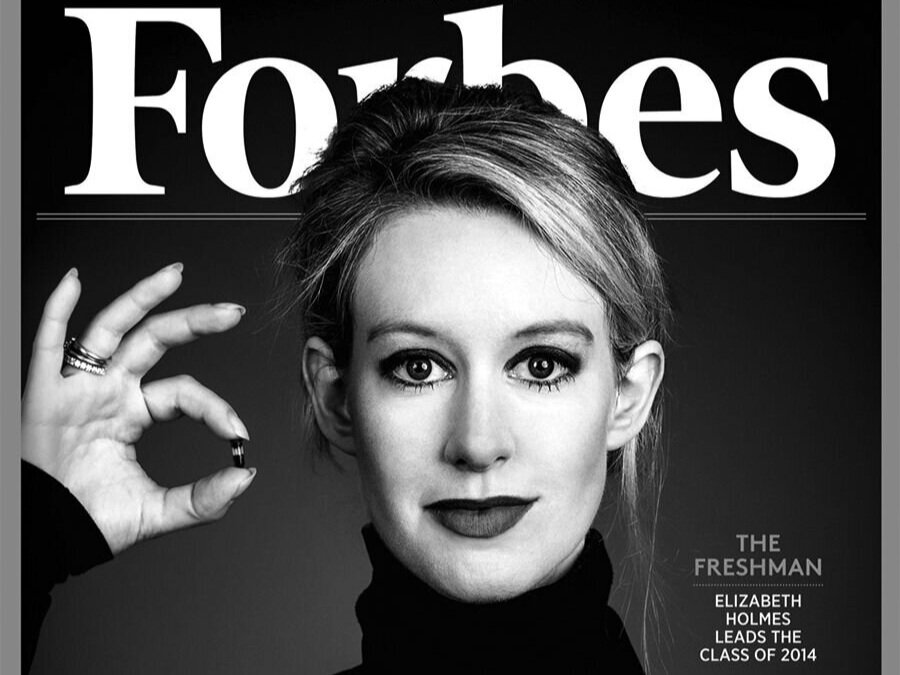

Silicon Valley Moments. Sometimes you look back at a person's career and wonder how they managed to be at the center of several critical points in tech history. Bill was a magnet for big moments. After six unsuccessful years as coach of Columbia's football team, Bill joined an ad agency and eventually made his way to the marketing department at Kodak. At the time, Kodak was a blockbuster success and lauded as one of the top companies in the world. However, the writing was on the wall: film was getting cheaper and cheaper, and digital was on the rise. After a few years, Bill was recruited to Apple by John Sculley. Bill joined in 1983 as VP of Marketing, just two years before Steve Jobs would famously leave the company. Bill was insistent that management try to keep Jobs. Steve would not forget his loyalty, and upon his return, Jobs named Campbell a director of Apple in 1997. In the intervening years, Bill became CEO of Claris, an Apple software division that functioned as a separate company. In 1990, when Apple signaled it would not spin Claris off into a separate company, Bill left with the rest of management. After a stint at Intuit, Bill became a CEO coach to several Silicon Valley luminaries, including Eric Schmidt, Steve Jobs, Shellye Archambeau, Brad Smith, John Donahoe, Sheryl Sandberg, Jeff Bezos, and more. Bill helped recruit Sandberg and current CFO Ruth Porat to Google. Bill was a serial networker who stood at the center of Silicon Valley.

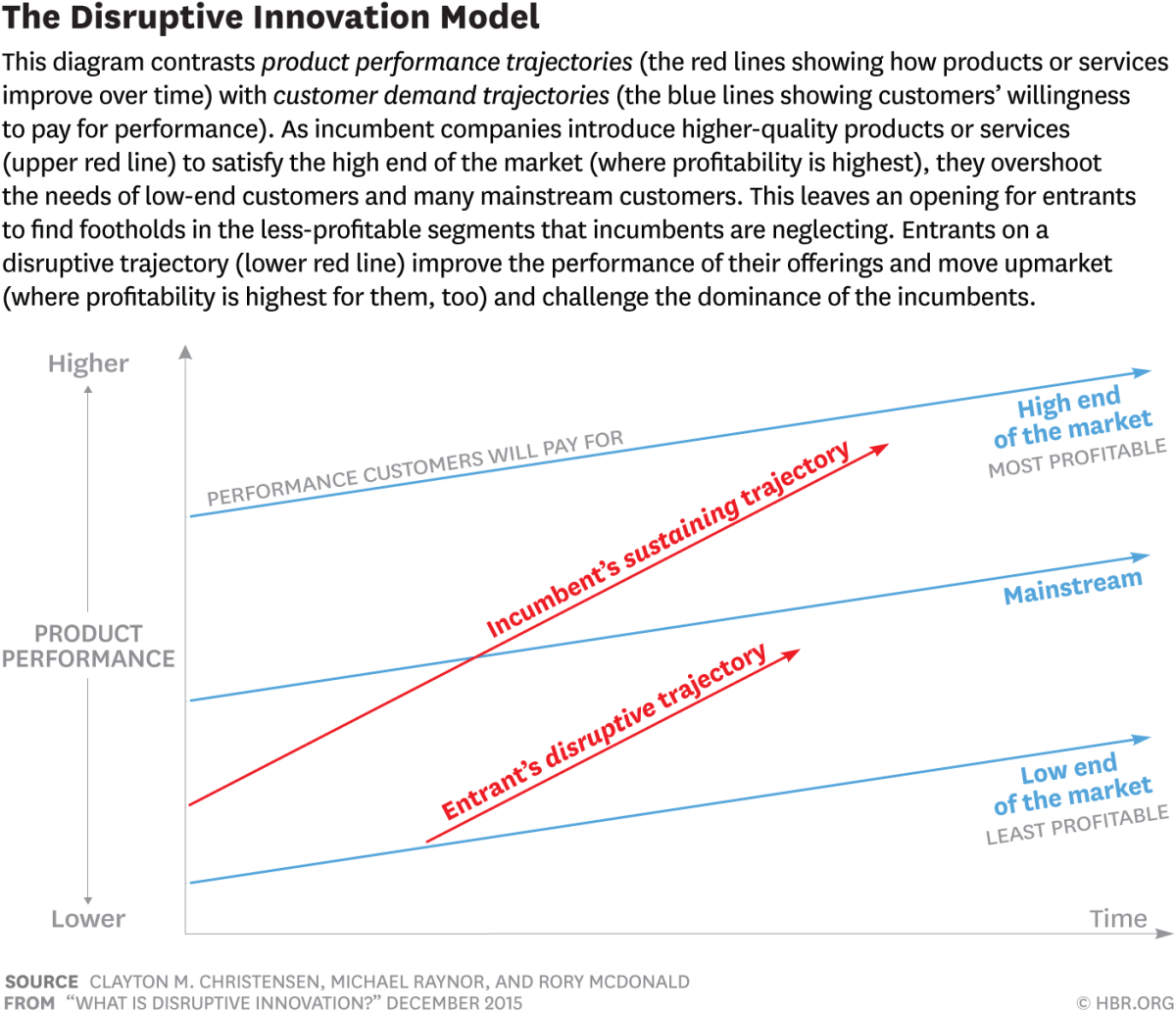

Failure and Success. Following his departure from Claris/Apple, Bill became CEO of Go Corporation, one of the first mobile computing companies. The company raised a ton of venture capital for the time ($75M) before an eventual fire sale to AT&T. The idea of a mobile computer was compelling, but the company faced stiff competition from Microsoft and Apple's Newton. Beyond competition, the original handheld devices lacked very basic features (easy internet, storage, network and email capabilities) that would eventually be included in Apple's iPhone. Sales across the industry were a disappointment, and AT&T eventually shut down the acquired Go Corporation. After the failure of Go Corporation, Bill was unsure what to do. John Doerr, the famous leader of Kleiner Perkins, introduced Bill to Intuit founder Scott Cook. Cook was considering retirement and looking for a replacement. Bill met with Cook, but Cook remained unimpressed. It was only after a second meeting, where Bill shared his philosophy on management and his focus on people, that Cook considered Campbell for the job. Bill joined Intuit as CEO and went on to lead the company until 1998, after which he became Chairman of the board, a position he held until 2016. Within a year of Campbell joining, Microsoft agreed to purchase the company for $1.5B. However, the Justice Department raised flags about the acquisition, and Microsoft called off the deal in 1995. Campbell continued to lead the company to almost $600M of revenue. When he retired from the board in 2016, the company was worth $30B.

Business Themes

Your People Make You a Leader. Campbell believed that people were the most crucial ingredient in any successful business. Leadership, therefore, was of utmost importance to Bill. Campbell lived by a maxim passed on by former colleague Donna Dubinsky: "If you're a great manager, your people will make you a leader. They acclaim that, not you." In an exchange with a struggling leader, Bill added to this wisdom: "You have demanded respect, rather than having it accrue to you. You need to project humility, a selflessness, that projects that you care about the company and about people." The humility Campbell speaks about is what Jim Collins called Level 5 leadership (covered in our April 2020 book, Good to Great). Research has shown that humble leaders can produce higher-performing teams, better flexibility, and better collaboration.

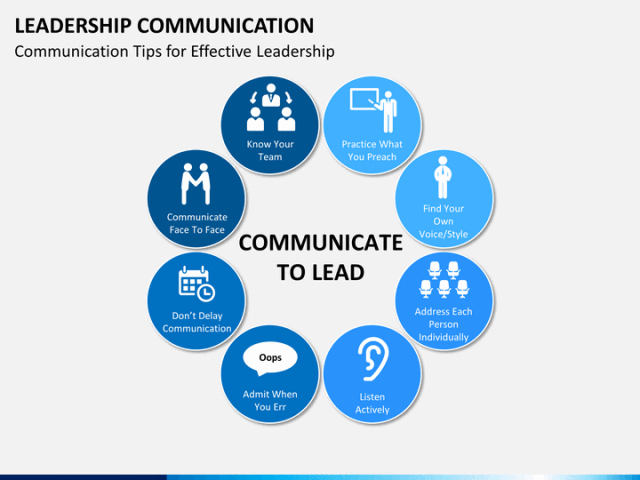

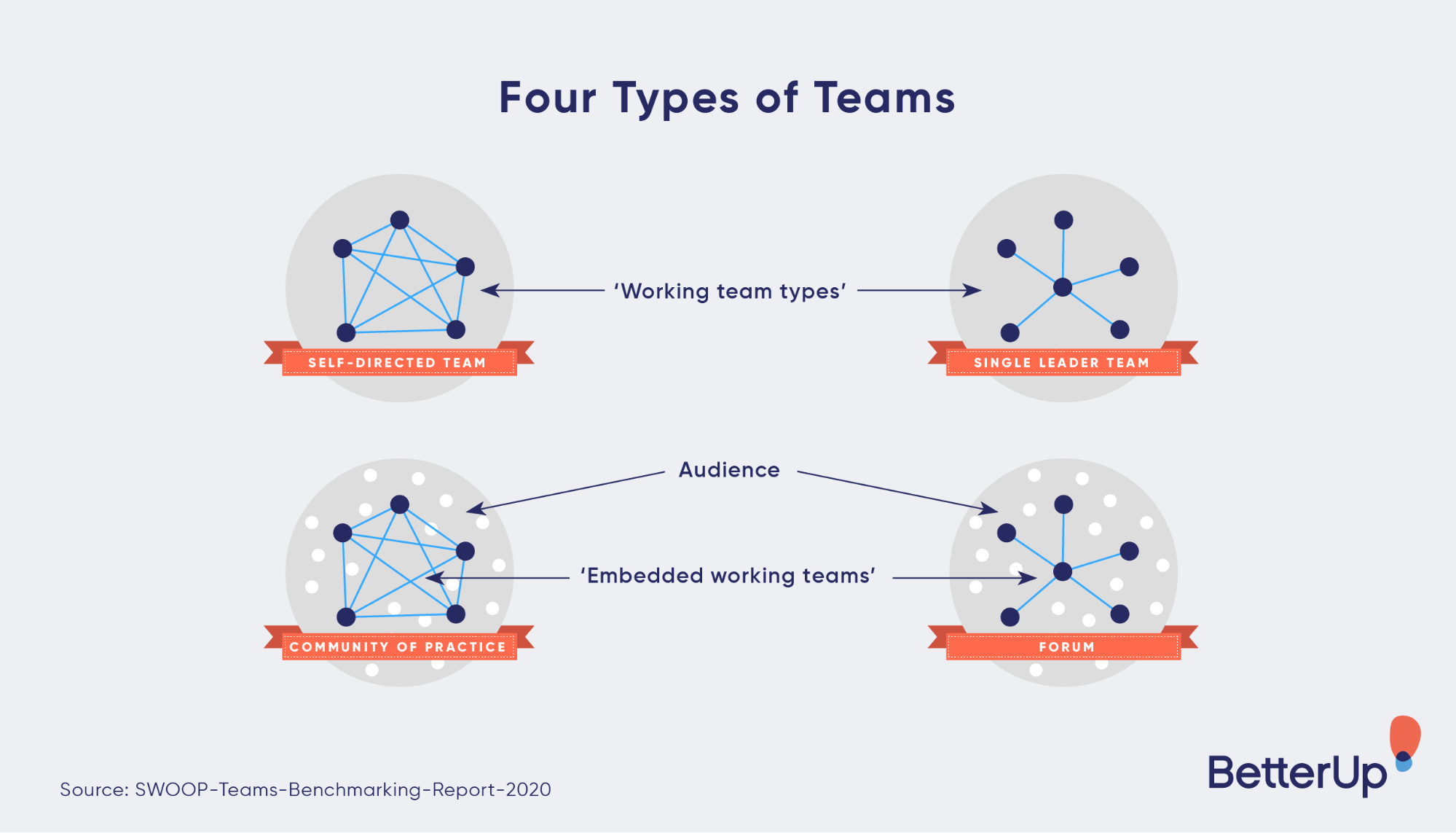

Teams Need Coaches. Campbell loved to build community. Every year he would plan a trip to the Super Bowl, where he would find a bar and put down roots. He'd get to know the employees, and after a few days, he was a regular at the bar. He understood how important it was to build teams and establish a community that engendered trust and psychological safety. Every team needs a good coach, and Campbell understood how to motivate individuals, give authentic feedback, and handle interpersonal conflicts. "Bill Campbell was a coach of teams. He built them, shaped them, put the right players in the right positions (and removed the wrong players from the wrong positions), cheered them on, and kicked them in their collective butt when they were underperforming. He knew, as he often said, that 'you can't get anything done without a team.'" His feedback was blunt: after a former colleague left to set up a new private equity firm, Bill checked out the website and called him up to tell him it sucked. As part of this feedback style, Bill always prioritized feedback in the moment: "An important component of providing candid feedback is not to wait. 'A coach coaches in the moment,' Scott Cook says. 'It's more real and more authentic, but so many leaders shy away from that.' Many managers wait until performance reviews to provide feedback, which is often too little, too late."

Get the Little Things Right. Campbell understood that every interaction was a chance to connect, help, and coach. As a result, he thought deeply about maximizing the value of every meeting: "Bill took great care in preparing for one-on-one meetings. Remember, he believed the most important thing a manager does is to help people be more effective and to grow and develop, and the 1:1 is the best opportunity to accomplish that." Meetings with Campbell frequently started with family and life discussions and would move back and forth between business and the meaning of life - deep sessions that made people think, reconsider what they were doing, and come back energized for more. He also was not shy about addressing issues and problems: "There was one situation we had a few years ago where two different product leaders were arguing about which team should manage a particular group of products. For a while, this was treated as a technical discussion, where data and logic would eventually determine which way to go. But that didn't happen, the problem festered, and tensions rose. Who was in control? This is when Bill got involved. There had to be a difficult meeting where one exec would win and the other would lose. Bill made the meeting happen; he spotted a fundamental tension that was not getting resolved and forced the issue. He didn't have a clear opinion on how to resolve the matter, on which team the product belonged, he simply knew we had to decide one way or another, now. It was one of the most heated meetings we've had, but it had to happen." Bill extended this practice to email, where he perfected concise and effective team communication. On top of 1:1s, meetings, and emails, Campbell stayed on top of messages: "Later, when he was coach to people all over the valley, he spent evenings returning the calls of people who had left messages throughout the day. When you left Bill a voice mail, you always got a call back."
Bill was a master of communication and a coach to everyone he met.